Here are the steps for efficiently aggregating data like this:

- Factorize the group keys, computing integer label arrays (O(N) per key).
- Compute a "cartesian product number" or group index for each observation (since you could theoretically have K_1 * … * K_p groups observed, where K_i is the number of unique values in key i).
- If the maximum number of groups is large, "compress" the group index by using the factorize algorithm on it again. Imagine you have 1000 uniques per key and 3 keys: most likely you do not actually observe 1 billion key combinations but rather some much smaller number.
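The factorize, group index, and compress steps can be sketched in a few lines of Python. This is a minimal illustration, not the pandas implementation: `factorize`, `decode`, and `group_mean` are hypothetical helper names, the hash table is a plain `dict`, and the final mean (computed in one linear pass with `np.bincount`) is an assumed example of an aggregation. The sketch always compresses the group index, whereas the text only calls for that when the number of possible groups is large.

```python
import numpy as np

def factorize(values):
    """Hash-table factorize: integer labels plus uniques in first-seen order."""
    table = {}  # value -> integer label
    labels = np.empty(len(values), dtype=np.int64)
    for i, v in enumerate(values):
        labels[i] = table.setdefault(v, len(table))
    return labels, list(table)

def decode(gid, factorized):
    """Turn a cartesian product number back into a tuple of key values."""
    parts = []
    for _, uniques in reversed(factorized):
        gid, lab = divmod(gid, len(uniques))
        parts.append(uniques[lab])
    return tuple(reversed(parts))

def group_mean(keys, data):
    """Mean of `data` for each observed combination of `keys`."""
    # Step 1: factorize each key, O(N) per key.
    factorized = [factorize(k) for k in keys]

    # Step 2: cartesian product number (mixed-radix encoding of the labels).
    # Note: K_1 * ... * K_p must fit in int64 for this sketch to be valid.
    group_index = np.zeros(len(data), dtype=np.int64)
    for labels, uniques in factorized:
        group_index = group_index * len(uniques) + labels

    # Step 3: compress to the combinations that actually occur.
    comp_ids, observed = factorize(group_index.tolist())

    # Assumed aggregation: sums and counts in one linear pass each.
    ngroups = len(observed)
    sums = np.bincount(comp_ids, weights=data, minlength=ngroups)
    counts = np.bincount(comp_ids, minlength=ngroups)
    return [decode(g, factorized) for g in observed], sums / counts

group_keys, means = group_mean(
    [["a", "a", "b", "b", "a"], [1, 2, 1, 1, 1]],
    [1.0, 2.0, 3.0, 5.0, 3.0],
)
```

Only three of the four possible key combinations occur in this toy input, so the compressed ids run from 0 to 2 and the aggregation arrays have length 3 rather than 4.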

How does that look algorithmically? I guarantee if you take a naive approach, you will crash and burn when the data increases in size. I know, because I did just that (take a look at the vbenchmarks). Laying down the infrastructure for doing a better job is not simple.

This is a small dataset, but imagine you have millions of observations and thousands or even millions of groups.

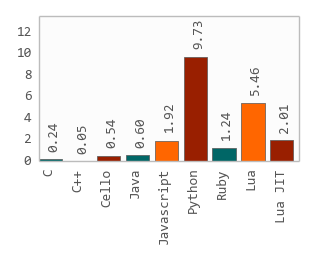

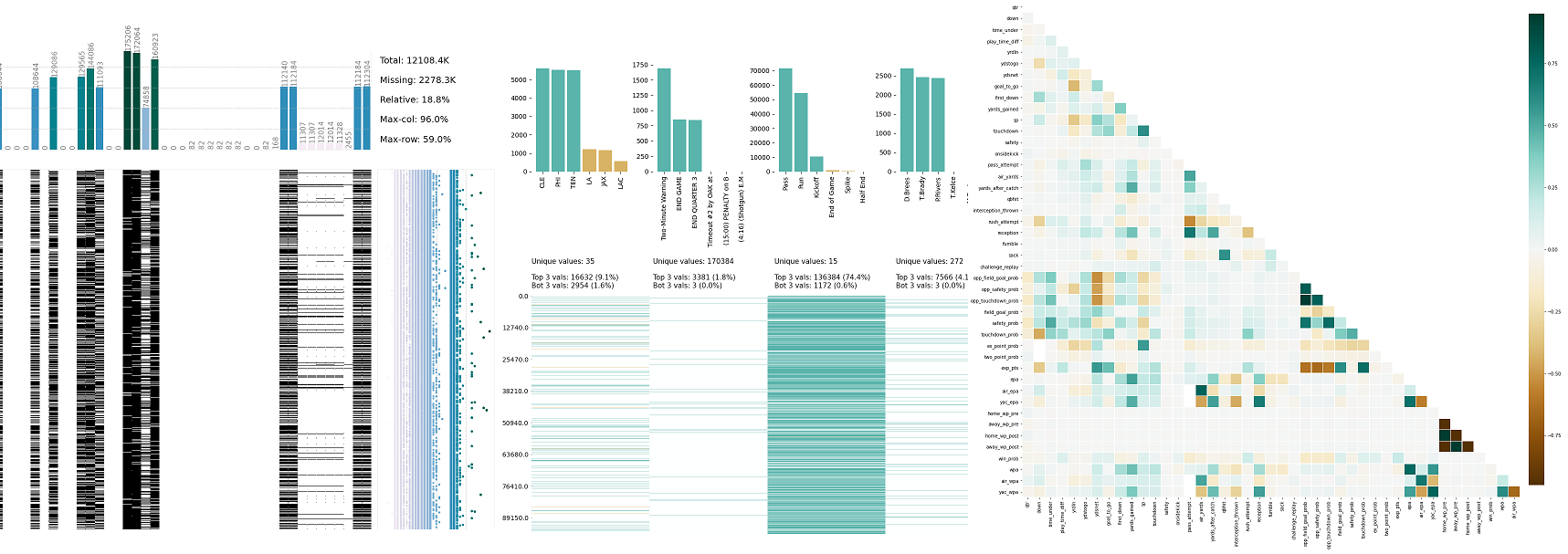

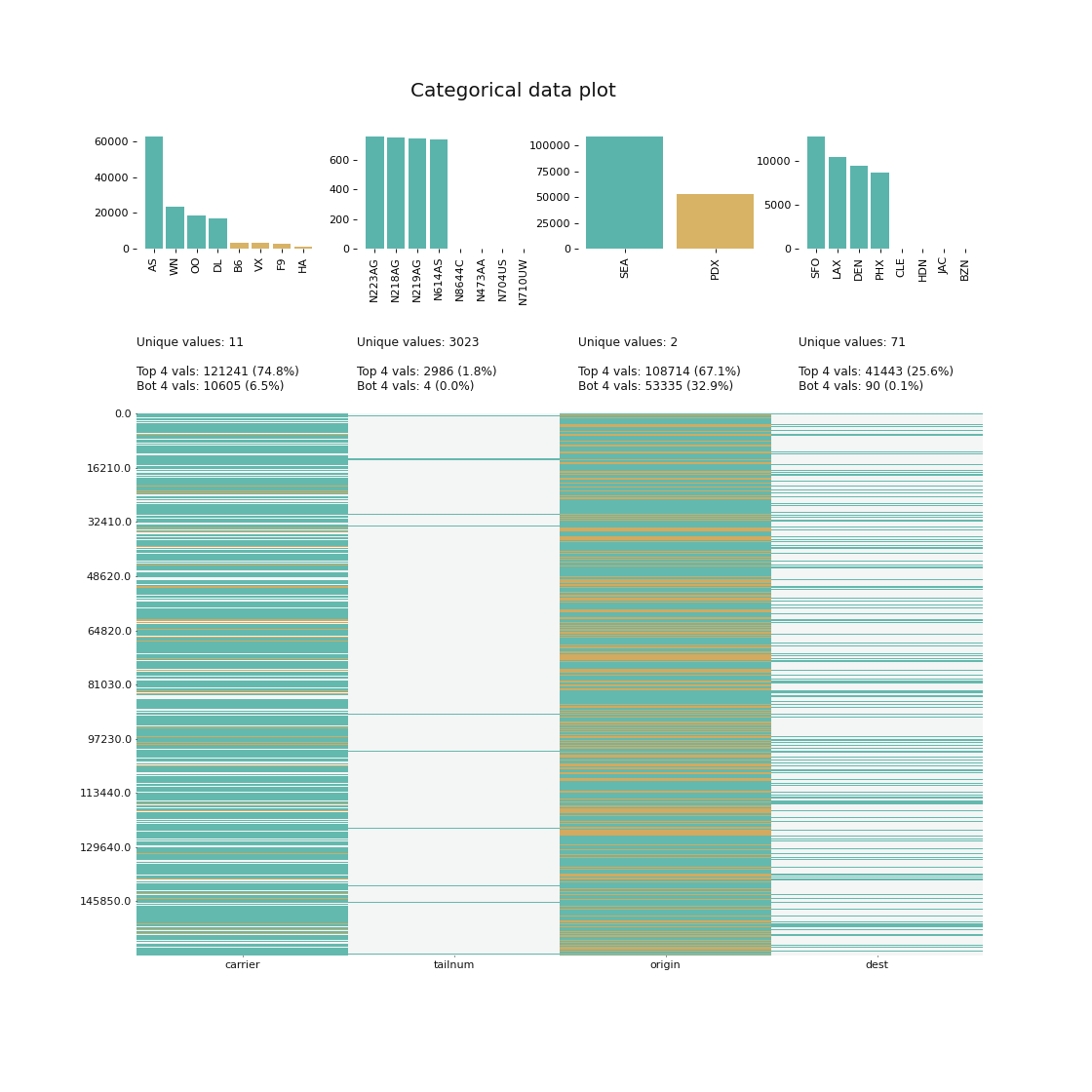

# Klib library python series

Those guys did a nice job of organizing the conference, and it was great to meet everyone there.

As part of pandas development, I have had to develop a suite of high performance data algorithms and implementation strategies which are the heart and soul of the library. I get asked a lot why pandas's performance is much better than R and other data manipulation tools. The reasons are two-fold: careful implementation (in Cython and C, so minimizing the computational friction) and carefully-thought-out algorithms.

Here are some of the more important tools and ideas that I make use of on a day-to-day basis:

- Hash tables. I use these for critical algorithms like unique and factorize (unique + integer label assignment).
- O(n) sort, known in pandas as groupsort, on integers with known range. This is a variant of counting sort: if you have N integers with known range from 0 to K - 1, they can be sorted in O(N) time. Combining this tool with factorize (hash table-based), you can categorize and sort a large data set in linear time. Failure to understand these two algorithms will force you to pay O(N log N), dominating the runtime of your algorithm.
- Vectorized data movement and subsetting routines: take, put, putmask, replace, etc.

Let me give you a prime example from a commit yesterday of me applying these ideas to great effect. Suppose I had a time series (or DataFrame containing time series) that I want to group by year, month, and day:

    In : rng = date_range('', periods=20, freq='4h')
    In : ts = Series(np.
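To make the groupsort idea above concrete, here is a minimal counting-sort sketch over integer labels in the range 0 to K - 1. It is an illustration under assumptions, not pandas' internal routine: `counting_sort_indexer` is a hypothetical name, and the Python loop stands in for what would be compiled code in practice. It returns an indexer rather than sorted values, so the same indexer can reorder any aligned array with `take`.

```python
import numpy as np

def counting_sort_indexer(labels, ngroups):
    """Stable sort indexer for integer labels in [0, ngroups), in O(N + K)."""
    labels = np.asarray(labels, dtype=np.int64)

    # Pass 1: count how many observations fall in each group.
    counts = np.bincount(labels, minlength=ngroups)

    # where[k] is the next free output slot for label k.
    where = np.zeros(ngroups, dtype=np.int64)
    where[1:] = np.cumsum(counts)[:-1]

    # Pass 2: drop each position into its group's next slot.
    indexer = np.empty(len(labels), dtype=np.int64)
    for i, lab in enumerate(labels):
        indexer[where[lab]] = i
        where[lab] += 1
    return indexer, counts

labels = np.array([2, 0, 1, 0, 2, 1])
values = np.array([10.0, 20.0, 30.0, 40.0, 50.0, 60.0])

indexer, counts = counting_sort_indexer(labels, 3)
sorted_labels = labels.take(indexer)   # labels grouped together, in order
sorted_values = values.take(indexer)   # values reordered the same way
```

Because the labels come from factorize, K is known up front and no comparisons are needed. The `counts` array also gives the boundaries of each group's contiguous chunk in the sorted output.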
